Published May 17, 2025

Ever wondered why you can't explain that perfect sunset to your friend in a way that captures exactly how it made you feel? Or why learning a new language feels like rewiring your brain? Or even why AI sometimes gives you answers that seem almost human but miss something essential?

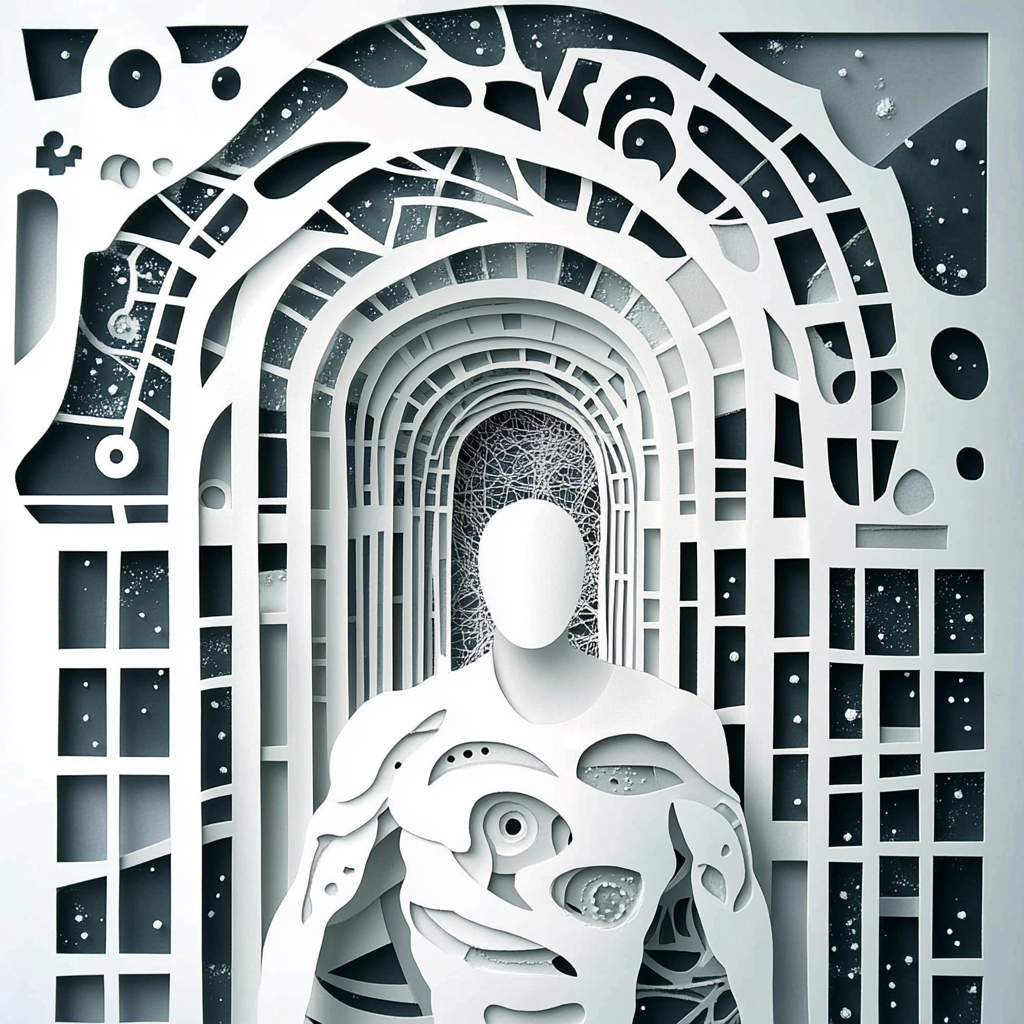

It turns out there might be a single mathematical framework that explains all of this, and it has to do with compression algorithms. Yes, the same basic concept that squeezes your vacation photos into smaller files might be the key to understanding consciousness itself.

Let's start with an uncomfortable truth: reality contains virtually infinite information, but your brain has limited processing power. It's like trying to stream an 8K movie over a dial-up connection: something's gotta give.

What's a brain to do? The same thing computers do when facing too much data: compress the heck out of it.

In my recently published paper, "The Eigendecomposition Framework," I propose that language and cognition evolved precisely as compression techniques for reality. They allow us to efficiently store, process, and communicate information with our limited neural hardware.

At a mathematical level, our compression works something like eigendecomposition or singular value decomposition (SVD). Don't run away; I promise this is about to get interesting!
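For readers who want the actual math, here is the standard textbook statement the analogy leans on. This is general linear algebra, not a result specific to the paper. SVD factors any real matrix $A$ into singular values $\sigma_i$, which measure how much each pattern contributes, and paired patterns $u_i, v_i$. Keeping only the first $k$ terms gives a compressed matrix $A_k$. The Eckart-Young theorem says $A_k$ is the best possible rank-$k$ approximation in the Frobenius norm, and its squared error equals the sum of the discarded $\sigma_i^2$. The link to eigendecomposition is that the columns of $V$ are eigenvectors of $A^{\top}A$.

$$
A = U \Sigma V^{\top} = \sum_{i=1}^{r} \sigma_i \, u_i v_i^{\top}, \qquad \sigma_1 \ge \sigma_2 \ge \cdots \ge \sigma_r > 0
$$

$$
A^{\top} A = V \, \Sigma^{\top} \Sigma \, V^{\top}
$$

$$
A_k = \sum_{i=1}^{k} \sigma_i \, u_i v_i^{\top}, \qquad \lVert A - A_k \rVert_F^{2} = \sum_{i=k+1}^{r} \sigma_i^{2}
$$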

Imagine reality as a gigantic spreadsheet with an infinite number of rows and columns. Your brain performs a mathematical trick that finds the "most important" patterns in this spreadsheet and keeps only those, discarding less significant information.

It's like having to describe a person in just three words instead of a detailed biography. You'd pick the most distinctive features: "tall, cheerful, mustached" rather than "has exactly 127,431 hairs and breathes approximately 23,000 times per day."
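Here is a minimal NumPy sketch of the "keep only the most important patterns" idea, using truncated SVD. It illustrates the general technique and is not code from the paper. The data is synthetic: three strong hidden patterns plus faint noise. The helpers `compress` and `energy_kept` are hypothetical names chosen for this example.

```python
import numpy as np

def compress(matrix: np.ndarray, k: int) -> np.ndarray:
    """Return the best rank-k approximation of `matrix` via truncated SVD."""
    u, s, vt = np.linalg.svd(matrix, full_matrices=False)
    # Keep only the k largest singular values and their paired vectors.
    return u[:, :k] @ np.diag(s[:k]) @ vt[:k, :]

def energy_kept(matrix: np.ndarray, k: int) -> float:
    """Fraction of the total squared singular values retained by the top k components."""
    s = np.linalg.svd(matrix, compute_uv=False)
    return float(np.sum(s[:k] ** 2) / np.sum(s ** 2))

rng = np.random.default_rng(0)
# Synthetic "reality": a 200 x 100 matrix built from 3 hidden patterns plus weak noise.
patterns = rng.normal(size=(200, 3)) @ rng.normal(size=(3, 100))
reality = patterns + 0.01 * rng.normal(size=(200, 100))

approx = compress(reality, k=3)
# np.linalg.norm on a matrix defaults to the Frobenius norm.
rel_error = np.linalg.norm(reality - approx) / np.linalg.norm(reality)
print(f"energy kept with 3 of 100 components: {energy_kept(reality, 3):.4f}")
print(f"relative reconstruction error: {rel_error:.4f}")
```

Keeping 3 of 100 components preserves almost all of the matrix's energy, because the discarded components hold mostly noise. Real data rarely has such a sharp cutoff, so choosing `k` becomes the "compression setting" trade-off.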

When we communicate, we're essentially trying to transfer our compressed version of reality to someone else's brain. Language is the tool we've evolved for this data transfer.

But here's the catch: all compression creates artifacts.

Ever seen a heavily compressed JPEG image where blocks appear that weren't in the original? Language does the same thing to reality. When you compress the continuous color spectrum into discrete words like "red," "blue," and "green," you create the illusion of sharp boundaries where none exist in reality.

This explains why some languages have four basic color terms while others have twelve: they're just using different compression settings!
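As a toy illustration, "compression settings" for color can be modeled as quantization. This sketch makes two simplifying assumptions. Hue is a single number on a 0 to 360 degree circle, and a vocabulary is a set of evenly spaced prototype hues. Real color-term boundaries are neither evenly spaced nor one-dimensional. The function names are hypothetical.

```python
import numpy as np

def make_vocabulary(n_terms: int) -> np.ndarray:
    """Hypothetical vocabulary: n_terms prototype hues spaced evenly around the hue circle."""
    return np.arange(n_terms) * (360.0 / n_terms)

def name_hue(hues: np.ndarray, prototypes: np.ndarray) -> np.ndarray:
    """Map each hue to the index of its nearest prototype, using circular distance."""
    diff = np.abs(hues[:, None] - prototypes[None, :]) % 360.0
    circular = np.minimum(diff, 360.0 - diff)
    return np.argmin(circular, axis=1)

def mean_error(hues: np.ndarray, prototypes: np.ndarray) -> float:
    """Average angular distance between each hue and the prototype it gets named as."""
    idx = name_hue(hues, prototypes)
    diff = np.abs(hues - prototypes[idx]) % 360.0
    return float(np.mean(np.minimum(diff, 360.0 - diff)))

# A dense sample standing in for the continuous spectrum (0.1-degree steps).
hues = np.linspace(0.0, 360.0, 3600, endpoint=False)
for n in (4, 12):
    vocab = make_vocabulary(n)
    print(f"{n:>2} terms: mean naming error {mean_error(hues, vocab):.2f} degrees")
```

Tripling the vocabulary cuts the average naming error by a factor of three (from 22.5 to 7.5 degrees in this setup). The price is more words to learn and store. The compression view predicts this trade-off, not a single "right" number of color terms.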

Remember the Sapir-Whorf hypothesis from your Linguistics 101 class? The idea that language shapes thought? This framework gives it a mathematical foundation.

Different languages represent different eigendecompositions of experiential space. They're optimized for different environments and cultural contexts.

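Take spatial terms. English describes most small-scale spatial relations with relative, body-centered words like "left," "right," "in front of," and "behind." Tzeltal, a Mayan language spoken in southern Mexico, relies heavily on an absolute "uphill/downhill" frame instead, even for objects on a tabletop.

In compression terms, Tzeltal dedicates more "compression bandwidth" to spatial relationships than English does. It's as if English compressed space at 144p while Tzeltal works in 4K, though Tzeltal might compress other aspects of reality more aggressively to compensate.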

When you habitually use a particular compression scheme, it affects how you perceive and categorize reality even in non-linguistic tasks. It's not that you can't perceive what your language doesn't name; it's just computationally more expensive.

Ever struggled to explain a concept from your native language that just doesn't translate well? Like the Danish "hygge" or German "Schadenfreude"?

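Now we can frame this mathematically. If each language is its own eigendecomposition of experience, then translation means re-expressing a concept in a different set of basis vectors. A concept that one language captures with a single component may have to be spread across several components in another, and part of it may not fit the new basis at all.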

It's like trying to open a JPEG with a program designed for PNG files: something's going to look weird.
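One way to picture the mismatch uses two hypothetical "languages." Each is a three-dimensional basis learned with SVD from a different synthetic "environment." A concept that language A expresses perfectly is projected onto language B's basis, and whatever falls outside B's basis is lost. The environments, dimensions, and helper names are illustrative assumptions, not measurements of Danish, German, or any real language.

```python
import numpy as np

def learn_basis(experiences: np.ndarray, k: int) -> np.ndarray:
    """Top-k right singular vectors of an (n_samples x n_features) matrix, as orthonormal columns."""
    _, _, vt = np.linalg.svd(experiences, full_matrices=False)
    return vt[:k].T  # shape (n_features, k)

def translate(concept: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Express a concept in a language's basis, then rebuild it from those coordinates."""
    coords = basis.T @ concept  # the compressed "words"
    return basis @ coords       # what a listener can reconstruct

rng = np.random.default_rng(1)
n_features = 6
# Two synthetic environments that emphasize different feature directions.
env_a = rng.normal(size=(500, n_features)) * np.array([5.0, 4.0, 3.0, 0.1, 0.1, 0.1])
env_b = rng.normal(size=(500, n_features)) * np.array([0.1, 0.1, 0.1, 5.0, 4.0, 3.0])
lang_a = learn_basis(env_a, k=3)
lang_b = learn_basis(env_b, k=3)

# A concept that lies entirely inside language A's subspace.
concept = lang_a @ np.array([1.0, 0.5, -0.5])
loss_in_a = np.linalg.norm(concept - translate(concept, lang_a))
loss_in_b = np.linalg.norm(concept - translate(concept, lang_b))
print(f"lost within A: {loss_in_a:.3f}, lost translating into B: {loss_in_b:.3f}")
```

In this setup, almost nothing is lost when the concept stays in A. Nearly all of it is lost in B, because B's basis points in almost orthogonal directions. Real languages overlap far more than this extreme case, so real translation loses only part of a concept.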

Here's where it gets really wild: what if consciousness itself is just a higher-order compression process?

Your brain compresses sensory input into neuronal activations, then compresses those into concepts and categories, and finally compresses those into a unified conscious experience.

Consciousness, in this view, is a function that creates a coherent model from the outputs of lower-level compressions:

Consciousness(t) = Compress(Senses(t), Thoughts(t), Memories(t), Consciousness(t-1))
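Read literally, the formula is a recurrence: each moment's state is a compression of the current inputs together with the previous state. The hidden state of a recurrent neural network works the same way. The sketch below is a hypothetical toy under that reading only. The fixed random projection `W`, the `tanh` squashing, the helper name `compress_step`, and all dimensions are assumptions chosen for illustration. Nothing here models actual consciousness.

```python
import numpy as np

rng = np.random.default_rng(42)
SENSE_DIM, THOUGHT_DIM, MEMORY_DIM, STATE_DIM = 32, 16, 16, 8

# Hypothetical fixed compression map: 72 input numbers (64 external + 8 previous state) -> 8.
W = rng.normal(scale=0.1, size=(STATE_DIM, SENSE_DIM + THOUGHT_DIM + MEMORY_DIM + STATE_DIM))

def compress_step(senses, thoughts, memories, prev_state):
    """One step of Consciousness(t) = Compress(Senses(t), Thoughts(t), Memories(t), Consciousness(t-1))."""
    stacked = np.concatenate([senses, thoughts, memories, prev_state])
    return np.tanh(W @ stacked)  # squash into a bounded, low-dimensional state

state = np.zeros(STATE_DIM)  # Consciousness(0): an empty starting state
history = []
for t in range(1, 6):
    senses = rng.normal(size=SENSE_DIM)
    thoughts = rng.normal(size=THOUGHT_DIM)
    memories = rng.normal(size=MEMORY_DIM)
    state = compress_step(senses, thoughts, memories, state)
    history.append(state)
    n_inputs = senses.size + thoughts.size + memories.size
    print(f"t={t}: {n_inputs} inputs -> {state.size} numbers")
```

Feeding the previous state back in means each moment's summary carries traces of every earlier one. That is the only property of the formula this toy tries to show.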

This explains key features of consciousness:

The "hard problem" of consciousness transforms into a computational question: how does a system implement a compression algorithm that creates a subjective perspective on its own operations?

This framework also helps explain why AI sometimes feels so alien despite being increasingly sophisticated.

Modern AI systems are implementing their own forms of dimensionality reduction:

But they compress reality differently than humans do:

No wonder communication between humans and AI suffers from fundamental compression misalignments! This view would also account for AI hallucinations (errors in reconstructing compressed information) and for AI's struggles with nuance and implicit information.

Beyond the philosophical mind-bending, this framework has practical applications:

At its most speculative, this framework invites us to reconsider the nature of reality itself. What we perceive as reality is always a reconstruction from compressed representations.

Different compression schemes, whether from different languages, different species, or different forms of intelligence, generate diverse ways of experiencing and understanding the world.

None is complete, but each offers a unique window into the infinite information density of existence. Together, they create a richer picture than any single compression algorithm could achieve alone.

So the next time you struggle to find the right words, remember: you're not failing at language; you're just bumping up against the limits of compression. And that's not just okay; it's the inevitable consequence of being a finite intelligence in an infinite universe.